In recent weeks, the ChatGPT hype has blown up my tech-heavy social feed. I follow many coders and content creators on TikTok and Twitter, and most of them are losing their minds over the disruption that OpenAI's ChatGPT represents to their disciplines. Their common refrain: "This changes everything." After trying it myself (more on that later) and reading more about it, I think that I share their view.

As someone who follows technology and AI topics, I should not have been surprised by this preview launch of the ChatGPT project. For many months my news feed has been full of stories about AI tools like GPT-3, GitHub Copilot, DALL-E, Stable Diffusion and more. But until now, these technologies required a certain amount of skill and hardware to configure, which confined them to the realm of the technologist or savvy enthusiast. ChatGPT puts this technology in the hands of anyone who wants to create a free user account on the service. This unprecedented ease of access catapults it into everyone's imagination, including mine, and I'll admit that I didn't see it coming this soon.

Learn more: "Generative AI: What it is and why it matters" and "Explaining the unexplainable AI"

In a way, the popularity of ChatGPT makes it easier for me to tell my friends and family what we do at SAS. As an AI company, we trade in the application of machine algorithms to business challenges and world-changing initiatives. As I tell this to my wife's Aunt Susan, she nods her head and says, "Ohhh," even as her eyes glaze over. But when I say, "For example, we use natural language processing and reinforcement learning to build expert systems that can improve outcomes – you know, like in ChatGPT," suddenly we have a shared understanding (to a point).

The people that I talk to feel one of two ways about ChatGPT:

- Excited and optimistic: This includes students and new coders, who can't wait to use a tool like this to bootstrap their "mundane" tasks like essay writing, programming, ideating and more. These folks aren't setting out to "cheat using AI," but they see this as a tool to get their projects started. It's better than opening an empty document or code file and starting from scratch.

- Horrified and intimidated: This includes professional writers and content creators, as well as veteran programmers. They recognize that the results from ChatGPT are often incorrect or incomplete, and that they potentially infringe on the intellectual property of other creators with no way to trace them back or cite sources. While ChatGPT's failings may be comforting ("it can't do my job! yet!"), the fear is that many users will accept the results as "good enough," and thus dilute the craft that we've dedicated our careers to.

GPT-3, the family of large language models behind ChatGPT (ChatGPT itself is fine-tuned from a model in OpenAI's GPT-3.5 series), was trained on a wide variety of published materials, including books (fiction and non-fiction), web pages, social media, and scientific journals. The model incorporates an astronomical number of parameters (175 billion in the largest GPT-3 model) that allow it to predict a plausible answer to almost any prompt. However, it cannot cite sources or explain how it arrived at that answer. This makes the model vulnerable to (unintentionally) plagiarizing another source or (of course) presenting an answer that is incorrect or biased by some hidden parameters.

Think of it this way: when you use a calculator or a computer program to calculate "2 + 2", the machine performs arithmetic by manipulating bits in a memory register to arrive at the correct sum of 4. When you ask a large language model like GPT-3 "What's 2 + 2?", it predicts, with a high degree of confidence, that the answer is 4. In this way it works more like your own brain, which can come up with quick answers that result from all of the training and reinforcement that life has brought your way. It's only when you're asked to "show your work" that you have to actually do the math and demonstrate your skill.
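To make the analogy a little more concrete, here is a deliberately toy SAS sketch. It is not how GPT-3 actually works; it only contrasts computing an answer with picking the most frequent answer seen before. The TRAINING data set and its values are hypothetical stand-ins for a model's training data.

```sas
/* Computing: SAS evaluates the expression and writes total=4 to the log */
data _null_;
  total = 2 + 2;
  put total=;
run;

/* Hypothetical "training data": answers that have been given to "2+2" */
data training;
  input prompt $ answer;
  datalines;
2+2 4
2+2 4
2+2 4
2+2 5
2+2 22
;
run;

/* "Predicting": rank the answers by how often they appear.          */
/* The first row of PREDICTIONS is the most frequent answer, not a   */
/* computed one; it happens to be right only because 4 dominates.    */
proc sql;
  create table predictions as
    select answer, count(*) as n
      from training
      group by answer
      order by n desc;
quit;
```

If the made-up data contained more wrong answers than right ones, the "prediction" would be wrong while the DATA step arithmetic would still return 4.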

What does ChatGPT mean for self-service support?

Of course, one of the first things I did with my new ChatGPT account was ask it to solve some SAS questions. My team manages SAS Support Communities, the largest repository of peer-to-peer knowledge among SAS practitioners. I want to know if this AI is gunning for my job.

For all I know, our community site content was used to train the GPT-3 model! Our friends at Stack Overflow, the world's largest general-purpose programming Q&A site, took the extraordinary step of banning GPT-generated answers from their forums. We have not done this on SAS Support Communities because we don't yet see people supplying AI-generated answers. (But this raises the question: how could we tell?)

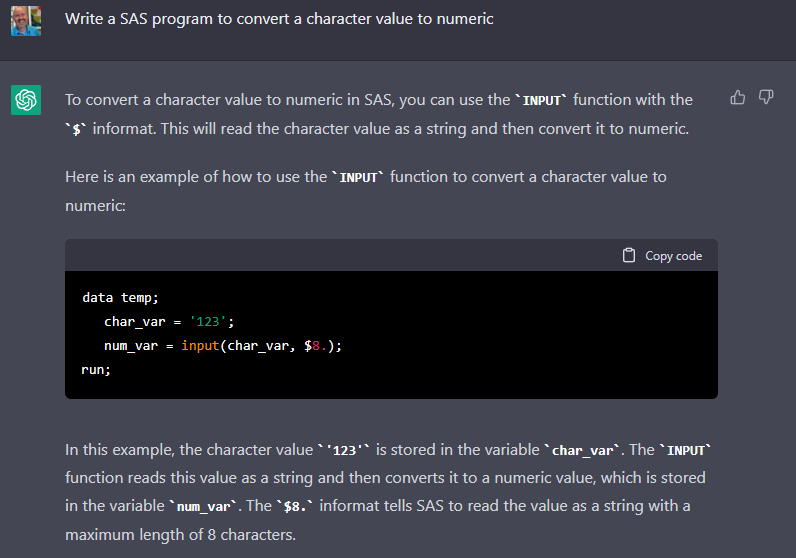

The first question I tried was the most popular SAS topic we see from beginners: "how do I convert a character value to numeric?"

ChatGPT did a nice job here. It explained the INPUT function and the role of informats to read a value as a number. Others might find some fault with it, but I found this answer to be acceptable as help for a beginner.
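For reference, a minimal sketch of the technique that answer describes looks like this: the INPUT function reads a character value with an informat and returns a number. The data set names (HAVE, WANT), the variable names, and the sample values are made up for illustration, and COMMA10. is just one reasonable informat choice because it also handles embedded commas.

```sas
/* Hypothetical input: numbers stored as character strings */
data have;
  length char_val $ 10;
  input char_val $;
  datalines;
42
3.14
1,234
abc
;
run;

data want;
  set have;
  /* INPUT(source, informat) reads the text as a number.              */
  /* COMMA10. also accepts embedded commas, so '1,234' becomes 1234.  */
  /* The ?? modifier suppresses the invalid-data note and error flag, */
  /* so 'abc' simply becomes a missing value.                         */
  num_val = input(char_val, ?? comma10.);
run;
```

Dropping the `??` modifier is often useful while debugging, because SAS then writes a note to the log for each value it can't read.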

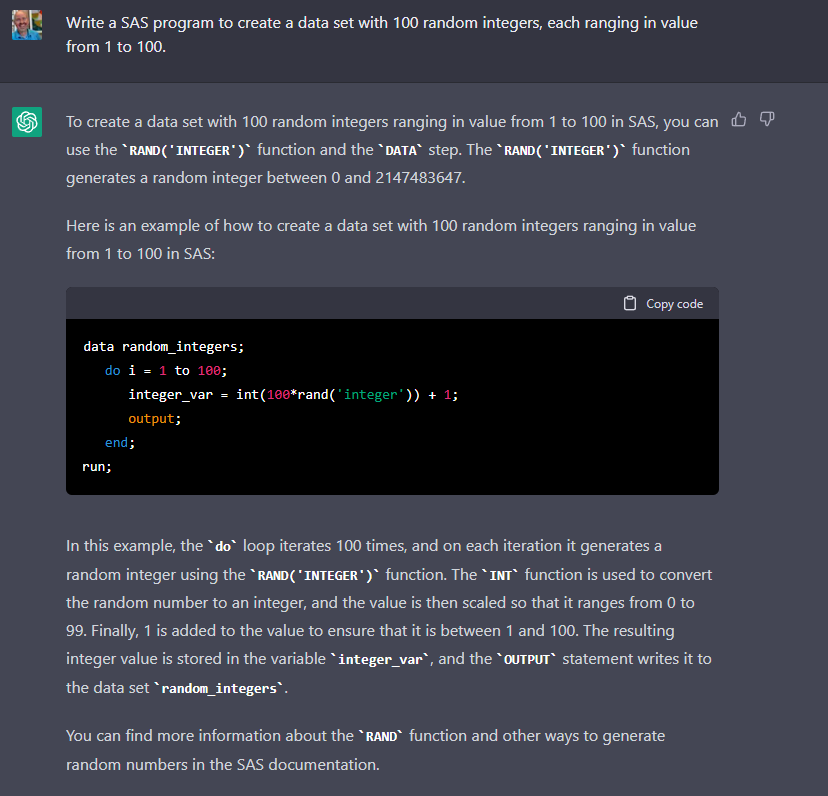

My next topic, a simulated homework assignment, produced a mixed result: "Write a SAS program to create a data set with 100 random integers, each ranging in value from 1 to 100."

I was impressed that the model wrote a program that uses the RAND() function with the 'integer' method, a relatively recent technique introduced in SAS 9.4 (maintenance release 5). Unfortunately, the model got the syntax wrong, so the code won't run as-is: the RAND() function needs more arguments. Whew! This leaves at least something for the student to figure out.
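The missing arguments are the lower and upper bounds of the range. A minimal sketch of a working program for this assignment follows, assuming a SAS release that supports the 'Integer' distribution in RAND. The data set name RAND_INTS, the variable X, and the seed 12345 are my own illustrative choices.

```sas
data rand_ints;
  /* Seed the random-number stream so results are reproducible; */
  /* the value 12345 is arbitrary.                               */
  call streaminit(12345);
  do i = 1 to 100;
    /* 'Integer' returns an integer uniformly distributed on [1, 100] */
    x = rand('integer', 1, 100);
    output;
  end;
  drop i;
run;
```

Calling STREAMINIT once at the top of the DATA step is enough here because the whole loop runs in a single iteration of the step.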

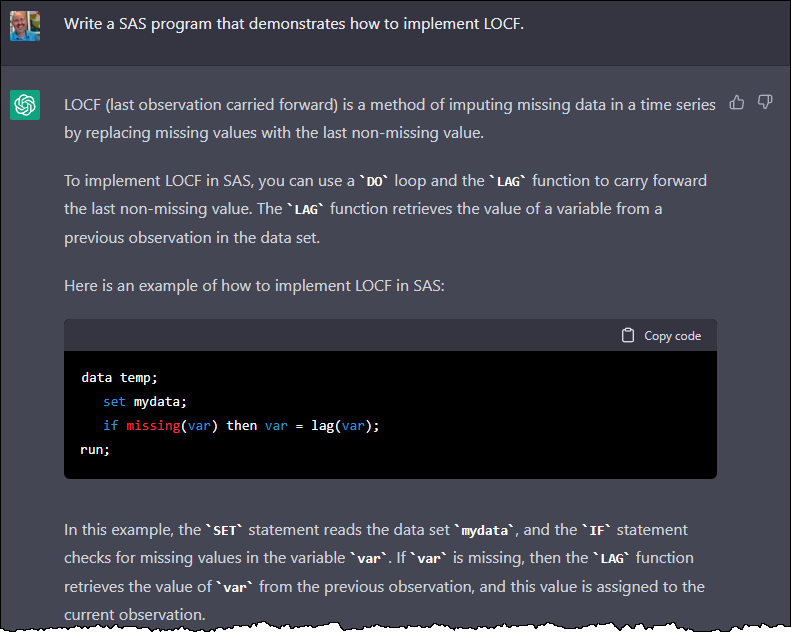

For my final example I asked a question that contained some jargon: "Write a SAS program that demonstrates how to implement LOCF."

Good job explaining LOCF! "LOCF (last observation carried forward) is a method of imputing missing data in a time series by replacing missing values with the last non-missing value." And many SAS coders try the LAG function, as ChatGPT did here, to achieve the goal. Unfortunately, like many humans, the model fell into the trap of thinking that the LAG function simply "looks back" at the previous record. In fact, LAG returns the value from the previous time that particular LAG call executed, which is not necessarily the previous record.
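A small example shows why the LAG approach falls short. In the sketch below (the data set HAVE and the variables ID, TIME and VALUE are hypothetical), the LAG version leaves the second of two consecutive missing values unfilled and carries a value across subjects. A RETAIN-based DATA step, one common way to implement LOCF in SAS, handles both cases.

```sas
/* Hypothetical longitudinal data, sorted by ID and TIME */
data have;
  input id time value;
  datalines;
1 1 10
1 2 .
1 3 .
1 4 15
2 1 .
2 2 20
2 3 .
;
run;

/* LAG approach: LAG(VALUE) returns the value VALUE had when this LAG  */
/* last executed, i.e. the original previous value, before any fill.   */
/* Row (1,3) stays missing because the original value at (1,2) is      */
/* missing, and row (2,1) picks up 15 from subject 1.                  */
data lag_try;
  set have;
  prev = lag(value);
  if missing(value) then value = prev;
  drop prev;
run;

/* LOCF with RETAIN: LAST_VALUE keeps its value across iterations */
data locf;
  set have;
  by id;
  retain last_value;
  if first.id then last_value = .;   /* don't carry values across subjects */
  if not missing(value) then last_value = value;
  else value = last_value;
  drop last_value;
run;
```

In LOCF, the filled values are 10, 10, 10, 15 for subject 1 and missing, 20, 20 for subject 2.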

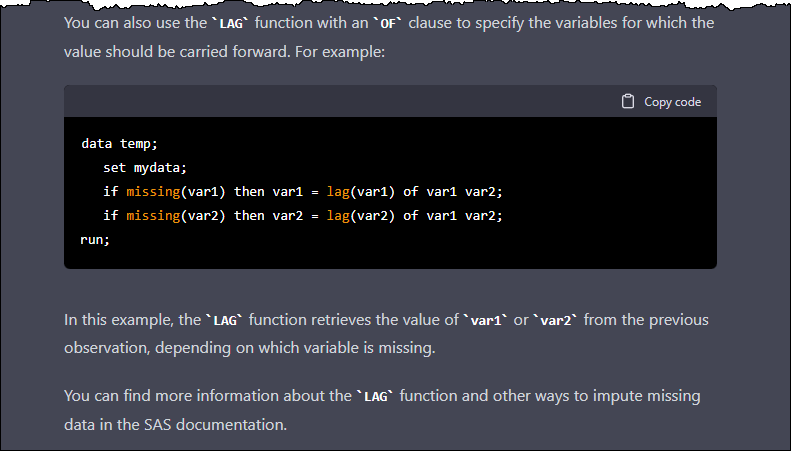

These errors are understandable...but then the model got cheeky and offered more details than I asked for. And it got more wrong.

This code demonstrates multiple LAG functions and tries to apply the technique to multiple variables using the OF keyword to specify a range of variables. I understand all of the words that "are coming out of its mouth" but they don't make sense here. It reminds me of that chatty person at a party who tries a little too hard to show you what they know, but you know they should have quit while they were ahead.
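LAG takes one argument (a variable or expression), so it can't accept an OF variable list. To carry forward several variables, one common pattern is an array of the variables plus a _TEMPORARY_ array, whose elements are automatically retained, to hold the last non-missing values. Here is a minimal sketch; the data set HAVE2 and the variables A, B and C are hypothetical.

```sas
/* Hypothetical data with three measures per visit, sorted by ID */
data have2;
  input id time a b c;
  datalines;
1 1 1 10 100
1 2 . 20 .
1 3 . . .
2 1 . 5 .
2 2 4 . 7
;
run;

data locf2;
  set have2;
  by id;
  array vars{*} a b c;        /* the variables to carry forward        */
  array prev{3} _temporary_;  /* last non-missing values; auto-retained */
  do j = 1 to dim(vars);
    if first.id then prev{j} = .;                   /* reset per subject */
    if not missing(vars{j}) then prev{j} = vars{j};
    else vars{j} = prev{j};
  end;
  drop j;
run;
```

The loop applies the same single-variable LOCF logic to each array element, so adding a variable only means adding it to both array definitions.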

We're all training the algorithm

I'm not trying to criticize ChatGPT by picking apart its answers to technical questions. The ChatGPT team intentionally allowed the model to generate answers that might be incorrect. If they had squelched these by tuning it toward more certainty, then it wouldn't attempt to answer nearly as many questions. Despite its flaws I think it's an amazing step forward. I also know that it's only going to get better.

By using ChatGPT (as millions have), we're all helping to train the algorithm. ChatGPT was trained with reinforcement learning from human feedback (RLHF), in which human ratings of the model's responses guide further training, and the thumbs-up and thumbs-down ratings we give can feed into that process. And rumor has it that the next generation of the model, GPT-4, will be trained on much more data and is coming soon.

More chat about ChatGPT

If you would like to learn more about GPT-3 and ChatGPT, I recommend this episode of the Hard Fork podcast.

And here's some deep reading from OpenAI: Forecasting Potential Misuses of Language Models for Disinformation Campaigns—and How to Reduce Risk. Clearly, they know that this tech has great potential but also presents some danger.

Some of our SAS users have had fun with the tool: A rhyming poem about SAS vs. R. And here's one that creates a graph using SAS and then Python. (Proud to say the SAS version was more concise and easier to read...)

You can also download this e-book about natural language processing.

6 Comments

Thanks Chris! What a fun read!

Thanks for sharing your thoughts on ChatGPT! Agree that RLHF is critical for the success of any AI tool.

Hi Chris. Regarding your point that ChatGPT cannot show sources: it's not working right now, but with this extension you can search Google and see ChatGPT results shown alongside the search results. https://chrome.google.com/webstore/detail/chatgpt-for-search-engine/feeonheemodpkdckaljcjogdncpiiban

Interesting! It seems like that just presents search results alongside ChatGPT answers, but it's not an indication of where ChatGPT "learned" the information in its response.

Great read Chris! Very enjoyable and educational at the same time. Well done 🙂

For completeness, either of the following statements will generate a random integer between 1 and 100. ChatGPT seems to have conflated the two methods:

```sas
i1 = rand('integer', 1, 100);
i2 = ceil(100*rand('uniform'));
```

If you use the CEIL function instead of the INT function, you do not need to add 1 to the RAND("UNIFORM") call.